An AI engineer at a Global Capability Centre (GCC) in Bengaluru is testing a new internal LLM that automates regulatory reporting. To validate the outputs, the engineer pastes a proprietary scoring algorithm into a prompt, and minutes later fragments of that code appear in an unapproved instance of the model. That one action captures the contemporary IP dilemma: LLMs make knowledge easier to access, and to the same extent they make it easier to expose. Multinational companies now use GCCs as sources of innovation. India hosts almost half of the world's GCCs, and they added billions in value in 2024-25. Protecting IP in these centres therefore matters not just locally but at the enterprise level. GCCs are also allocating resources to AI: a substantial number report deploying GenAI for knowledge management and analytics, seeking economic gains such as shorter product cycles, lower cost per operation, and faster time-to-market.

Large Language Models (LLMs) change how data is used: data moves out of files and databases and into prompts, model checkpoints, and derivative embeddings. This movement makes traditional perimeter controls ineffective. The resulting gaps are critical for GCCs, which handle parent-company R&D, IP-intensive processes, and client data across borders, so a breach in one GCC can have global legal and business repercussions. According to recent surveys, CIOs and compliance leaders are placing AI governance higher on their lists of priorities, and the majority cite data privacy and governance as leading concerns.

The LLM value proposition in GCCs is strong: private LLMs and AI automation raise productivity, reduce reliance on repetitive manual operations, and create additional revenue sources through analytics and productised services. According to a consulting study, the most successful GCCs use AI to increase productivity and differentiation. This finding supports the view that GCCs that integrate AI properly can realise disproportionate enterprise value. These financial advantages must be weighed against the need for strict data management, because they could be overshadowed by the loss of intellectual property, legal costs, or negative press.

The brief table below, which GCC leaders can use right away, maps common LLM risks to practical mitigation measures.

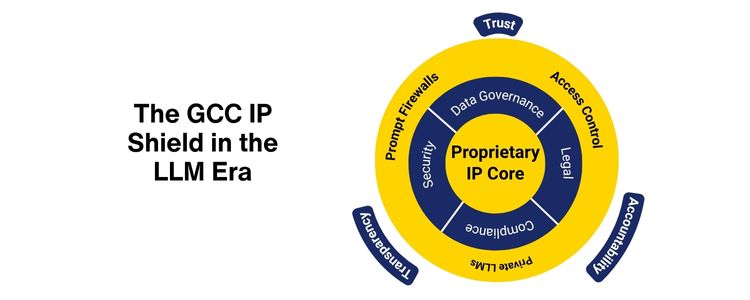

A clear industry response has emerged: private enterprise LLMs and dedicated model instances are becoming the standard in GCCs where IP matters. These models run in managed environments, either on-premises or in dedicated cloud tenancy. They allow more stringent access controls, provenance tracking, and logging, and they keep data inside the enterprise boundary. Many GCCs are also trialling retrieval-augmented generation (RAG) patterns, which store source documents separately and query them on the fly instead of incorporating them into model weights.
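The RAG pattern described above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Document` class, the `allowed_roles` field, the sample store, and the word-overlap ranking, which stands in for the embedding search a real system would more likely use. The point is only that source documents stay in a separate, access-controlled store and are looked up at query time rather than being baked into model weights.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # hypothetical access-control field


def tokenize(text: str) -> set:
    """Lowercase a string and split it into a set of alphanumeric words."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return set(cleaned.split())


def retrieve(query: str, store: list, role: str, k: int = 2) -> list:
    """Return up to k documents the role may read, ranked by word overlap."""
    query_words = tokenize(query)
    scored = []
    for doc in store:
        if role not in doc.allowed_roles:
            continue  # access is checked at query time, not at training time
        overlap = len(query_words & tokenize(doc.text))
        if overlap > 0:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, docs: list) -> str:
    """Assemble the retrieved passages and the question into one prompt."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return f"Answer using only the context below.\n{context}\n\nQuestion: {query}"


# Illustrative store; in practice this would be a document database.
STORE = [
    Document("policy-1", "Credit scoring models must be reviewed quarterly.",
             frozenset({"risk", "audit"})),
    Document("hr-7", "Leave requests are approved by the reporting manager.",
             frozenset({"hr"})),
]

docs = retrieve("How often are scoring models reviewed?", STORE, role="risk")
prompt = build_prompt("How often are scoring models reviewed?", docs)
```

Because the documents never enter the model's weights, revoking a role or deleting a document takes effect on the next query, which is the property that makes this pattern attractive for IP-sensitive content.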

Regulatory regimes are converging on traceability and human oversight. India's Digital Personal Data Protection (DPDP) framework and the EU AI Act specify requirements for the governance of personal data and AI systems. The AI Act, which is being rolled out in phases, mandates greater transparency and human control over many AI systems, and this directly affects offshore GCC operations and their model-governance practices. GCCs should trace internal and external cross-border data flows and comply with both local and international obligations; otherwise, substantial fines and compensation costs may follow.

An IP-Conscious AI Culture

Technology alone will not solve the IP dilemma. GCCs need to build AI risk literacy into day-to-day workflows: design prompts with security in mind, review model-training code, and define playbooks for handling suspicious outputs. Upskilling is in progress: a significant portion of GCCs report ongoing GenAI upskilling and pilots, but these need to go hand in hand with policy implementation and regular audits.
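Regular audits depend on a trustworthy record of who sent what to which model. One possible shape for such a record is a hash-chained log, sketched below. The field names and the functions `append_record` and `verify` are hypothetical, and timestamps are left out to keep the example deterministic. The log stores a digest of each prompt rather than the prompt itself, so the audit trail does not become a second copy of the IP.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    """Hash a record body in a stable, key-sorted JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_record(log: list, user: str, model: str, prompt: str, sources: list) -> dict:
    """Append a tamper-evident record; each entry includes the previous hash."""
    body = {
        "user": user,
        "model": model,
        # Store a digest rather than the prompt text itself.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "sources": sources,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    record = {**body, "hash": _digest(body)}
    log.append(record)
    return record


def verify(log: list) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {key: value for key, value in record.items() if key != "hash"}
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True


audit_log = []
append_record(audit_log, "analyst-42", "internal-llm-v1", "Summarise policy-1", ["policy-1"])
append_record(audit_log, "analyst-42", "internal-llm-v1", "List review dates", ["policy-1"])
chain_ok = verify(audit_log)
```

Editing or deleting any earlier record changes its hash and breaks the chain, so `verify` gives an auditor a quick integrity check before reading the log in detail.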

The prospects of AI in GCCs are not black and white. Organisations that treat LLMs as strategic resources and combine them with strict governance, private model architectures, contractual transparency, and an IP-sensitive culture will gain the economic advantage without sacrificing intellectual differentiation.

A GCC is an offshore facility of a multinational company that performs specialised functions such as research and development, information technology services, and strategic management.

It is a government programme that gives women entrepreneurs up to 1 crore in bank loans to fund greenfield projects.

Personal responsibilities and unconscious bias are the factors that lead to their mid-career attrition and slow their career progression.

They bring new ideas, understanding, and team-oriented leadership that speed up progress in areas such as AI and cybersecurity.

By 2030, women are expected to hold 25-30 per cent of GCC leadership positions, which will be central to the growth of the Indian market.

Aditi, with a strong background in forensic science and biotechnology, brings an innovative scientific perspective to her work. Her expertise spans research, analytics, and strategic advisory in consulting and GCC environments. She has published numerous research papers and articles. A versatile writer in both technical and creative domains, Aditi excels at translating complex subjects into compelling insights, which she aligns with consulting, advisory, and GCC operations. Her ability to bridge science, business, and storytelling positions her as a strategic thinker who can drive data-informed decision-making.

Why LLMs Complicate IP Protection

Economic Advantages

An Operational IP-Protection System for LLMs in GCCs

| Pillar | Risk / Challenge | GCC Mitigation (practical) |
| --- | --- | --- |
| Data Governance | Proprietary and client data mixed in training data | Classify data into sensitive, regulated, and non-sensitive tiers; never use sensitive or regulated data for external model training |
| Prompt Security | Employees share confidential details in prompts | Enterprise prompt firewalls, prompt templates, and redaction proxies |
| Model Deployment | Third-party APIs may store inputs | Favour dedicated LLM instances (cloud or on-prem) with no-reuse SLAs |
| Legal & Contracts | Unclear IP ownership of AI outputs | Clear IP clauses in vendor and employment agreements, including ownership of model-generated output |
| Audit & Explainability | Black-box models obscure provenance | Keep auditable data provenance and maintain model cards for datasets and fine-tunes |
| People & Process | Low AI risk awareness | Mandatory GenAI security training; SOC playbooks for incidents |
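To make the Prompt Security row more concrete, here is a minimal sketch of what a redaction proxy might do before a prompt leaves the enterprise boundary. The patterns, the `<confidential>` tag convention, and the `redact` function are all assumptions made for this example; a real deployment would tune its rules to its own data and would likely combine them with classifier-based detection.

```python
import re

# Hypothetical patterns; each maps a label to a regex for one kind of secret.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
    # Assumed convention: staff wrap proprietary snippets in this tag.
    "CONFIDENTIAL_BLOCK": re.compile(r"<confidential>.*?</confidential>", re.DOTALL),
}


def redact(prompt: str) -> tuple:
    """Replace sensitive spans with placeholders and report what was found."""
    findings = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}_REDACTED]", prompt)
        if count:
            findings.append((label, count))
    return prompt, findings


raw = (
    "Check this for priya@example.com using key sk-abcdefghijklmnop1234: "
    "<confidential>score = w1 * income + w2 * tenure</confidential>"
)
clean, findings = redact(raw)
```

The `findings` list can feed the incident playbooks mentioned in the People & Process row: a prompt that triggers a redaction is a useful signal for targeted training, even though the secret itself never left the proxy.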

Transition to Private LLMs and Managed Ecosystems

Regulations and Enforcement

Conclusion

Frequently Asked Questions (FAQs)

Aditi
